Unveiling Faces: Navigating the Path of Face Recognition with Machine Learning

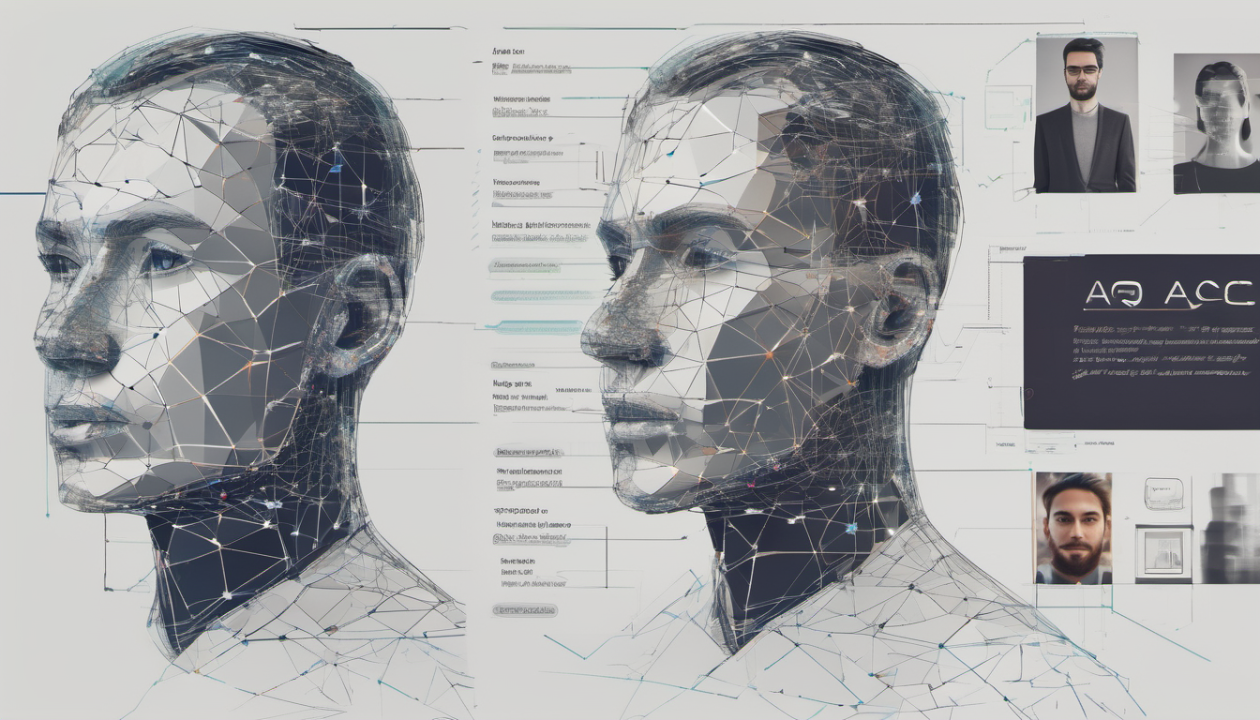

Face recognition, a pivotal application of computer vision, hinges on the adept utilization of machine learning (ML) algorithms. This technology empowers the identification and verification of individuals by scrutinizing and contrasting facial attributes extracted from images or video frames. The process involves several pivotal steps, each bolstered by specific ML algorithms.

Step 1: Face Detection

The first crucial step in face recognition is face detection, where the algorithm locates and extracts faces from images or video frames. Traditional methods like Haar cascades and Histogram of Oriented Gradients (HOG) are commonly used for this purpose. Haar cascades utilize a cascade classifier trained to detect faces based on predefined features, while HOG focuses on gradients of pixel intensities to identify facial regions.

Step 2: Feature Extraction

Subsequently, feature extraction ensues, aiming to encapsulate essential characteristics of each visage. Principal Component Analysis (PCA), Local Binary Patterns (LBP), and Linear Discriminant Analysis (LDA) are pivotal in this regard. PCA mitigates the curse of dimensionality by transforming facial data into a lower-dimensional space, while LBP characterizes texture and patterns. Additionally, LDA, often coupled with PCA, aims to maximize inter-class separability while minimizing intra-class variance.

Step 3: Classification

The pivotal task of classification follows, where extracted features are juxtaposed against known faces to ascertain individual identities. In addition to traditional classifiers like Support Vector Machines (SVM) and k-Nearest Neighbors (k-NN), ensemble models garner attention. Ensemble techniques amalgamate multiple classifiers, including SVMs, k-NN, and Logistic Regression, to enhance predictive performance by leveraging diversity and mitigating individual weaknesses.

Ensemble techniques leverage the diversity of multiple classifiers to mitigate individual weaknesses and improve accuracy.

-

Voting: In the ensemble method of voting, each classifier contributes to the final decision through a democratic process.

- Hard Voting: The class predicted by the majority of classifiers is selected as the final prediction.

- Soft Voting: Classifiers’ probabilities are averaged, and the class with the highest average probability is chosen.

-

Bagging (Bootstrap Aggregating): Multiple subsets of the training data are generated through resampling with replacement.

- Each subset is used to train a separate classifier.

- Predictions from all classifiers are combined to make the final decision.

-

Boosting: Iteratively trains weak classifiers, with each subsequent classifier focusing on the instances misclassified by its predecessors.

- More weight is given to misclassified instances in each iteration.

- Aim is to improve the overall performance of the ensemble model.

Step 4: Model Training and Evaluation

Model training, integral to the process, entails the optimization of parameters to refine the model’s ability to discern facial identities accurately. Furthermore, the emphasis on cross-validation supersedes mere training, ensuring the robustness of the model against varying environmental conditions. Techniques such as GridSearchCV facilitate hyperparameter tuning, enhancing model performance by identifying optimal parameter configurations.

Extending to Deep Learning:

Deep learning, a subset of machine learning, has revolutionized face recognition by leveraging deep neural networks to automatically learn hierarchical representations from raw data. Convolutional Neural Networks (CNNs) are the cornerstone of deep learning-based face recognition systems due to their ability to learn spatial hierarchies of features directly from pixel values.

Step 1: Convolutional Feature Extraction

In deep learning-based approaches, CNNs are employed for both face detection and feature extraction tasks. CNN architectures like VGG, ResNet, and MobileNet are pretrained on large-scale datasets such as ImageNet and fine-tuned for face recognition tasks. These networks learn to extract discriminative features from facial images through a series of convolutional and pooling layers.

Step 2: Facial Embedding

Instead of traditional feature extraction methods, deep learning-based approaches often employ facial embedding techniques to map faces into a high-dimensional feature space. Models like FaceNet utilize siamese or triplet networks to learn embeddings that preserve facial identity information while maximizing inter-class variations and minimizing intra-class variations.

Step 3: Classification and Verification

In the final step, facial embeddings are compared using similarity measures such as cosine similarity or Euclidean distance to perform classification or verification tasks. Deep learning models can directly output a similarity score between two faces, enabling efficient face matching and recognition. Additionally, techniques like softmax classification are used for multi-class face recognition scenarios.

Conclusion:

Face recognition, driven by both traditional machine learning and deep learning algorithms, has evolved into a powerful technology with applications in security, surveillance, authentication, and personalization. While traditional methods offer robustness and interpretability, deep learning approaches provide unparalleled accuracy and scalability, paving the way for transformative advancements in the field of computer vision. As research in this area continues to progress, we can expect further improvements in face recognition systems, making them more reliable and accessible for diverse real-world applications.

The Role of Data and Challenges

In the realm of face recognition, data plays a paramount role, serving as the foundation upon which models are built. However, challenges such as the curse of dimensionality pose significant hurdles, necessitating judicious feature selection and dimensionality reduction techniques. Moreover, data preprocessing steps, including normalization and augmentation, play a pivotal role in ensuring the quality and diversity of training data, thereby enhancing model generalization and performance.